What is A/B Testing?

A/B testing (or split testing) is a method of comparing two versions of a webpage or app against each other to determine which one performs better.

The process works something like this:

First, somebody comes up with an idea, which we call a hypothesis. To run an A/B test on a hypothesis, it should be framed in a specific format:

- If we [insert idea here] then [insert the expected outcome here] because [insert why you think the outcome will happen here].

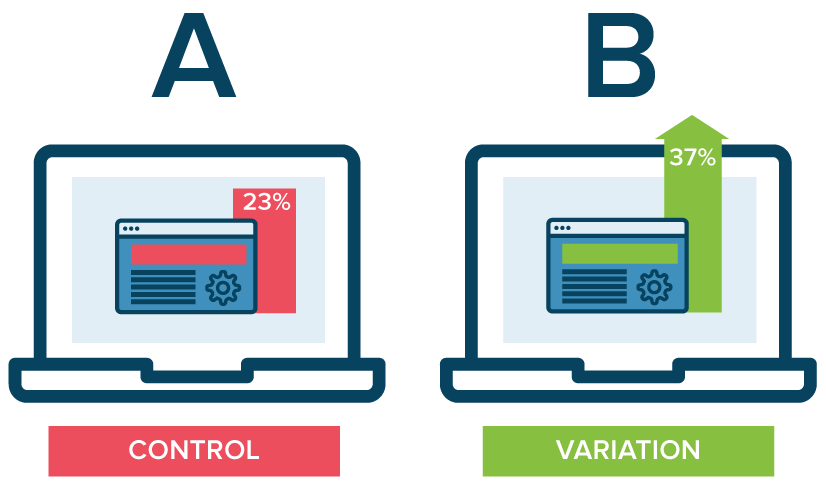

For example: if we change the next-page button colour from red to green, then average pages per session will increase, because green is a more inviting colour than red and users will click it more.

Since the hypothesis is usually just a hunch, we test it to see whether the change actually increases pages per session. If we set up the test correctly, the resulting data should answer this question for us. Using the example above, we can modify our web pages to create two variations of an article, then compare variant A, with a red button, to variant B, with a green button. We can set the goal of the test to measure pages per session, or use a simpler metric like clicks on the button.

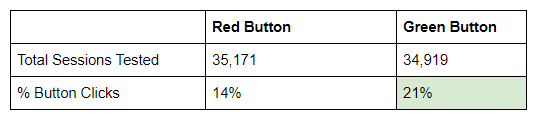
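To make the two candidate goals concrete, here is a minimal Python sketch of how pages per session and button click rate could be computed for each variant. The record layout (`variant`, `pages_viewed`, `clicked_next`) and the sample records are assumptions made up for illustration; they are not the format of any particular analytics tool.

```python
from collections import defaultdict


def summarize_by_variant(sessions):
    """Return pages per session and click rate for each variant.

    `sessions` is an iterable of dicts with the hypothetical keys
    'variant', 'pages_viewed' and 'clicked_next'.
    """
    totals = defaultdict(lambda: {"sessions": 0, "pages": 0, "clicks": 0})
    for session in sessions:
        bucket = totals[session["variant"]]
        bucket["sessions"] += 1
        bucket["pages"] += session["pages_viewed"]
        bucket["clicks"] += 1 if session["clicked_next"] else 0

    return {
        variant: {
            "sessions": t["sessions"],
            "pages_per_session": t["pages"] / t["sessions"],
            "click_rate": t["clicks"] / t["sessions"],
        }
        for variant, t in totals.items()
    }


if __name__ == "__main__":
    # Made-up records, only to show the expected input shape.
    example_sessions = [
        {"variant": "A", "pages_viewed": 1, "clicked_next": False},
        {"variant": "A", "pages_viewed": 3, "clicked_next": True},
        {"variant": "B", "pages_viewed": 2, "clicked_next": True},
    ]
    for variant, stats in summarize_by_variant(example_sessions).items():
        print(variant, stats)
```

Click rate is the simpler of the two metrics because it only needs one event per session, while pages per session depends on how a session is defined.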

Next we determine what and how much we want to test. If the test can be run against any article, we can pick a portion of our site visitors to be included in the test (e.g. 10% of the site traffic would see the test). Of the 10% who see the test, we split the visitors evenly and randomly between the variations (5% of site traffic would see the green button and 5% would see the red button). After running the test and collecting data, we look at the test result and decide whether we should implement the idea in production. A sample result might look like this:

In this example, the data supported the hypothesis: the green button resulted in more clicks, so we would change the entire site to use green buttons instead of red.
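The two mechanical steps described above, splitting traffic and comparing the variants, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Google Optimize works internally: the function names, the experiment name and the hash-based bucketing are all hypothetical, and the two-proportion z-test is one common way to check that a difference in click rate is unlikely to be chance.

```python
import hashlib
import math

TEST_TRAFFIC_SHARE = 0.10  # 10% of site traffic sees the test, as in the example


def assign_variant(visitor_id, experiment="next-button-colour"):
    """Return 'A' (red), 'B' (green), or None if the visitor is not in the test.

    Hashing the visitor id gives a stable, effectively random value in [0, 1),
    so the same visitor always lands in the same group.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket >= TEST_TRAFFIC_SHARE:
        return None  # the other 90% see the unchanged site
    # Split the included visitors evenly: 5% of traffic each.
    return "A" if bucket < TEST_TRAFFIC_SHARE / 2 else "B"


def two_proportion_z_test(clicks_a, visitors_a, clicks_b, visitors_b):
    """Return (z, two-sided p-value) for the difference in click rate, B minus A."""
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    print(assign_variant("visitor-123"))
    # Made-up counts, for illustration only.
    z, p = two_proportion_z_test(clicks_a=100, visitors_a=1000,
                                 clicks_b=150, visitors_b=1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (a common, if arbitrary, cut-off is 0.05) suggests the difference between the buttons is probably not noise. With few visitors, "more clicks" alone is weak evidence for rolling the change out to the whole site.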

How are we using it at Shared?

At Shared, we recently started using a tool called Google Optimize, which makes it easy to modify the HTML on a site and capture the test results. So far we have run about five tests, and we will be sharing the results as Buzzkill Insights shortly. We invite anyone with ideas (hypotheses) to test to send them to Darwin, Kaela or Trevor on the development team.

Our goal is to run tests constantly, so that we keep improving the experience for our audience while optimizing our revenue. Before we change any of our site layout, ad placement or page flow, we can confirm our hypothesis with real data, which helps us make data-driven decisions. One of our goals is to provide a customized experience for each of our visitors, and we can achieve that by using A/B testing to find the best page layout and page flow for different audiences.